Optimizing Resource Allocation for Docker Containers on unRAID: A Step-by-Step Guide

Facing resource allocation challenges on unRAID? I’ll share how I manage more than 40 Docker containers on unRAID. Discover how to set memory and CPU limits, optimize core usage, and prioritize containers to keep your server running smoothly, even under heavy loads.

Managing resource allocation across Docker containers can be challenging, especially when running multiple services on an unRAID server. I faced this issue firsthand while setting up my first unRAID server with Paperless-NGX and Nextcloud: I was importing thousands of documents and migrating 12TB of data at the same time. Through trial and error, research, and community feedback, I developed an approach to effectively manage and limit resource usage across all containers.

In this blog post, I’ll share my process for setting resource limits for Docker containers on unRAID to keep the server running smoothly and efficiently, even under heavy loads.

Don’t want to handle this on your own? You can schedule a remote call for personalized support.

TL;DR

To optimize resource usage in unRAID Docker containers:

- Identify the recommended RAM and CPU cores for each service.

- Use Docker's "Extra Parameters" field to set memory and CPU limits.

- (Optional) Pin containers to all but one core, leaving one core dedicated to unRAID.

- (Optional) Adjust CPU share priorities for critical containers.

- Monitor the resource usage and update the configured limits as needed.

Introduction

Running multiple Docker containers on unRAID can lead to resource contention, where some services consume so much CPU or memory that others are starved of resources. The resulting bottlenecks can become severe enough that unRAID itself stops responding.

When I first encountered this issue, my unRAID server was overwhelmed by the demands of Paperless-NGX and Nextcloud, among others. After doing some research and discussing the problem with the unRAID community on Reddit, I found a method to effectively distribute resources and ensure stable performance across all containers.

Here’s the detailed approach I use for each container I add to my unRAID system.

Step 1: Identify Resource Requirements

Before setting up a Docker container, it's essential to determine its resource needs. Usually, you'd do that by looking up the official hardware requirements and recommendations, but you'll soon find that there simply are none for many of the services you are trying to set up.

That's why I typically start by asking ChatGPT the following question:

Question: "What's the recommended amount of RAM and CPU cores to run [Name of Service]?"

For example, if ChatGPT suggests one and a half CPU cores and 4GB of RAM for a service, I use these values to configure the container.

This provides reasonable starting values that can be adjusted to fit your needs, as explained in Step 5 of this blog post.
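To make the hand-off from this step to the next one concrete, here's a small shell helper that formats a RAM value and a CPU count as the string you'd paste into a container's "Extra Parameters" field. It's a sketch: extra_params is a hypothetical name (not part of unRAID or Docker), and it assumes you pass Docker-style memory sizes such as 4g or 512m.

```sh
#!/bin/sh
# Hypothetical helper: turn a recommended RAM size and CPU count into the
# string for unRAID's "Extra Parameters" field. --memory-swap gets the same
# value as --memory so the container cannot use swap on top of its RAM.
extra_params() {
  local mem="$1"   # Docker memory size, e.g. 4g or 512m
  local cpus="$2"  # number of CPUs, fractions allowed, e.g. 1.5
  printf '%s\n' "--memory=\"$mem\" --memory-swap=\"$mem\" --cpus=$cpus"
}

# One and a half cores and 4GB of RAM, as in the example above.
extra_params 4g 1.5
```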

Step 2: Configure Resource Limits in Docker

Once I know the required resources, I configure the Docker container's resource limits using the "Extra Parameters" field in unRAID. Here’s how I set the limits:

--memory="4g" --memory-swap="4g" --cpus=1.5

Explanation:

- --memory="4g": Limits the container’s RAM usage to 4GB.

- --memory-swap="4g": Limits the total memory (RAM + swap) the container can use to 4GB. Because this matches the --memory value, the container can't use any swap at all. This only makes a difference if you've set up swap on unRAID, for example with the Swapfile plugin, since unRAID doesn't use swap out of the box.

- --cpus=1.5: Caps how much of the host's CPU time the container can use. For instance, if the host machine has two CPUs and you set --cpus=1.5, the container can use at most one and a half CPUs' worth of processing time, spread across any of the cores. This is the equivalent of setting --cpu-period="100000" and --cpu-quota="150000".
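Because --cpus is shorthand for a period and a quota, the conversion is simple arithmetic. The sketch below reproduces it, assuming Docker's default period of 100000 microseconds (100 ms); the function name is hypothetical, and awk does the multiplication because POSIX sh has no floating-point math.

```sh
#!/bin/sh
# Convert a --cpus value into the equivalent --cpu-period/--cpu-quota pair.
# The quota is the CPU time (in microseconds) the container may use per
# period, summed across all cores.
cpus_to_period_quota() {
  local period=100000 quota
  # Add 0.5 before truncating so the result rounds to the nearest microsecond.
  quota=$(awk -v c="$1" -v p="$period" 'BEGIN { printf "%d", c * p + 0.5 }')
  printf '%s\n' "--cpu-period=$period --cpu-quota=$quota"
}

cpus_to_period_quota 1.5   # --cpu-period=100000 --cpu-quota=150000
cpus_to_period_quota 4     # --cpu-period=100000 --cpu-quota=400000
```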

For more details about the parameters Docker supports, check out Docker's documentation on resource constraints.

If you'd like to verify that your new settings are in effect, connect to unRAID via SSH and run docker exec -it <Container_Name> /bin/bash to open a shell inside the container in question (use /bin/sh instead if the image doesn't include Bash).

Inside the container, run yes > /dev/null. The yes command prints "y" in an endless loop, which keeps one CPU core fully busy.

If you set a limit of, let's say, 4 CPU cores by adding --cpus=4 as a parameter, open 5 terminal windows (one more than the limit) and run the yes command in each of them, so the container tries to use more CPU than it's allowed. If everything is set correctly, the CPU usage shown on the host by running docker stats <Container_Name> in another terminal window should not exceed 400%. Press Ctrl+C in each window to stop the yes commands afterwards.
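Opening several terminal windows works, but the same test can be scripted from a single SSH session. This is a minimal sketch under a few assumptions: the helper names are made up, the container image includes sh and yes, and docker exec -d (detached mode) stands in for the separate windows.

```sh
#!/bin/sh
# Hypothetical helpers for the CPU limit stress test. Run on the unRAID host.

# Busy loops needed to exceed a --cpus limit: round the limit up, then add one.
workers_for_limit() {
  awk -v c="$1" 'BEGIN { n = int(c); if (n < c) n++; print n + 1 }'
}

# docker stats counts 100% per fully used core, so the ceiling is cpus * 100.
expected_ceiling_percent() {
  awk -v c="$1" 'BEGIN { printf "%d\n", c * 100 + 0.5 }'
}

stress_container() {
  local name="$1" cpus="$2" n i
  n=$(workers_for_limit "$cpus")
  i=0
  while [ "$i" -lt "$n" ]; do
    # -d runs each yes loop detached inside the container.
    docker exec -d "$name" sh -c 'yes > /dev/null'
    i=$((i + 1))
  done
  sleep 5  # give the CPU counters a moment before taking a sample
  echo "Started $n yes processes; expect at most $(expected_ceiling_percent "$cpus")% CPU."
  docker stats --no-stream "$name"
}
```

After sourcing the file, run stress_container <Container_Name> 4. The yes loops keep running in the background, so stop them afterwards, for example with docker restart <Container_Name>, since minimal images often don't include pkill.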

Step 3 (Optional): Optimize CPU Core Allocation

When I first asked in unRAID's official Reddit community how to limit the CPU usage of each container, most people recommended pinning each container to specific cores. In my opinion, that's not really a solution: it can lead to situations where your Docker containers use 100% of their pinned cores while your other cores sit idle with plenty of unused capacity.

But that doesn't mean there is no reason to pin your containers (and VMs) to specific cores.

If you have X cores available in total, you could, for example, pin all containers and VMs to cores 2 through X, leaving core 1 dedicated to unRAID (unRAID's CPU pinning settings number CPUs starting at 0, so core 1 here is CPU 0 there). This ensures that unRAID always has a core available, even when all other cores are fully loaded by your containers and VMs.
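unRAID's CPU pinning settings can do this through the web UI, but if you prefer setting it by hand, Docker's --cpuset-cpus parameter takes a list of CPUs such as 1-7. The sketch below is a hypothetical helper (not an unRAID tool) that builds that list for every logical CPU except the reserved ones, using 0-based numbering.

```sh
#!/bin/sh
# Hypothetical helper: print a --cpuset-cpus value covering CPUs 0..total-1
# except the comma-separated reserved ones, compressed into ranges.
cpuset_excluding() {
  awk -v total="$1" -v reserved="$2" 'BEGIN {
    n = split(reserved, r, ",")
    for (i = 1; i <= n; i++) skip[r[i]] = 1
    out = ""; start = -1
    for (cpu = 0; cpu <= total; cpu++) {
      if (cpu < total && !(cpu in skip)) {
        # Usable CPU: open a new range or extend the current one.
        if (start < 0) start = cpu
        prev = cpu
      } else if (start >= 0) {
        # Close the current range as "a" or "a-b".
        out = out (out == "" ? "" : ",") (start == prev ? start : start "-" prev)
        start = -1
      }
    }
    print out
  }'
}

cpuset_excluding 8 0     # 1-7
cpuset_excluding 8 0,4   # 1-3,5-7
```

On the host, cpuset_excluding "$(nproc)" 0 prints the list to paste into --cpuset-cpus="..." in the Extra Parameters field. With hyperthreading, cat /sys/devices/system/cpu/cpu0/topology/thread_siblings_list shows which logical CPUs share core 0 (it may print a range such as 0-1 or a list such as 0,8); pass them comma-separated, e.g. cpuset_excluding 16 0,8, to keep the whole physical core free.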

Step 4 (Optional): Prioritize Critical Containers

Following the steps so far will, however, likely lead to overprovisioning of your RAM and CPU cores: you might have, for example, 32GB of RAM and a 20-core CPU, while the limits you defined in Steps 1 and 2 add up to more than that.

For example, if you have three containers that can each use a maximum of 16 GB of RAM and 8 cores, they'd be allowed to use 48 GB of RAM and 24 cores combined, which you don't have in this example.
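A quick way to spot this situation is to add up the limits. The sketch below is hypothetical: it reads one line per container in the form "name memory_in_GB cpus" and compares the totals with the host, using the numbers from the example above.

```sh
#!/bin/sh
# Hypothetical check: sum per-container limits from stdin and compare them
# with the host's RAM (GB) and CPU count given as arguments.
check_overcommit() {
  awk -v hm="$1" -v hc="$2" '
    { mem += $2; cpu += $3 }
    END {
      printf "limits: %g GB RAM, %g CPUs (host: %g GB, %g CPUs)\n", mem, cpu, hm, hc
      if (mem > hm || cpu > hc) print "overcommitted"; else print "fits"
    }'
}

# Three containers at 16 GB / 8 cores each on a 32 GB, 20-core host.
printf '%s\n' "app1 16 8" "app2 16 8" "app3 16 8" | check_overcommit 32 20

# On the unRAID host, configured limits can be read back with docker inspect
# (Memory is in bytes, NanoCpus in billionths of a CPU; 0 means no limit):
#   docker inspect --format '{{.Name}} {{.HostConfig.Memory}} {{.HostConfig.NanoCpus}}' $(docker ps -q) |
#     awk '{ printf "%s %g %g\n", $1, $2 / 1073741824, $3 / 1e9 }' | check_overcommit 32 20
```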

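To make sure the most important containers get the largest portion of the available CPU time when that happens, you can use the --cpu-shares parameter to assign them a higher priority.

The default value is 1024. Shares are relative weights that only take effect when CPU time is scarce: a container with --cpu-shares=2048 gets roughly twice as much CPU time as a container with the default 1024 while both are competing for it, and when the CPU has capacity to spare, every container can use as much as its own limits allow.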
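To get a feel for what the weights mean, this rough model splits a fully contended set of CPUs in proportion to each container's shares. It's my own simplification, not how the Linux scheduler is implemented: it ignores --cpus caps and idle containers, and the container names are only examples. The weight itself is set by adding, for example, --cpu-shares=2048 to a container's Extra Parameters.

```sh
#!/bin/sh
# Rough model: when every container is busy, each gets about
# shares / total_shares of the contended CPUs. Arguments: CPU count,
# then name=shares pairs.
share_split() {
  local cpus="$1"
  shift
  printf '%s\n' "$@" | awk -F= -v c="$cpus" '
    { name[NR] = $1; s[NR] = $2; total += $2 }
    END { for (i = 1; i <= NR; i++) printf "%s %.2f\n", name[i], c * s[i] / total }'
}

share_split 4 nextcloud=2048 paperless=1024 other=1024
# nextcloud 2.00
# paperless 1.00
# other 1.00
```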

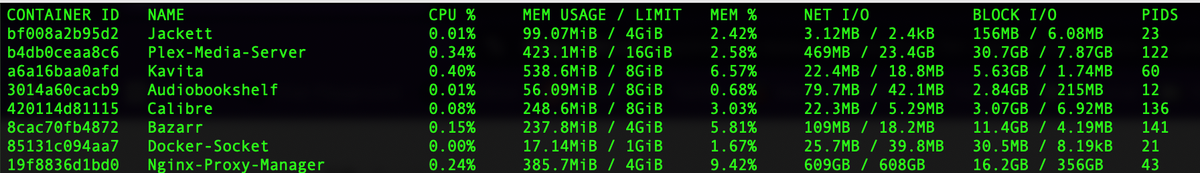

You can monitor the impact of these changes by running docker stats in the terminal. In this output, 100% CPU usage corresponds to one entire core.
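If you'd rather read the numbers as cores, a small helper can convert the CPU column. This sketch relies on docker stats' --format placeholders {{.Name}}, {{.CPUPerc}}, and {{.MemUsage}}; the function names are hypothetical.

```sh
#!/bin/sh
# Convert docker stats' CPU column (100% = one full core) into cores in use.
percent_to_cores() {
  awk -v p="$1" 'BEGIN { sub(/%$/, "", p); printf "%.2f\n", p / 100 }'
}

# One snapshot of all running containers: name, CPU in cores, memory usage.
snapshot() {
  docker stats --no-stream --format '{{.Name}} {{.CPUPerc}} {{.MemUsage}}' |
    while read -r name cpu mem; do
      printf '%-25s %6s cores  %s\n' "$name" "$(percent_to_cores "$cpu")" "$mem"
    done
}
```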

Step 5: Ongoing Optimization

With all Docker containers limited to a specific amount of RAM and a specific number of cores they can use, you are off to a good start. Moving forward, you can adjust the configured limits based on each container's actual resource requirements on your system.

For example, suppose a container has a memory limit of 8GB but never exceeds 1GB during regular use. In that case, you might want to lower its limit, since the container can clearly perform its tasks with less, while still leaving some headroom for occasional peaks.

On the other hand, if you notice that a service frequently uses all of its allocated resources and isn't performing as well as you'd like, increase its CPU and memory limits gradually until you find a configuration that keeps the container running smoothly without negatively affecting your other services.
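One way to turn observed usage into a new memory limit is a simple headroom rule. The default factor of 1.5 below is my own assumption rather than a Docker or unRAID recommendation, and the function name is hypothetical; the peak is the highest usage you've seen in docker stats, in GB.

```sh
#!/bin/sh
# Hypothetical rule of thumb: new limit = observed peak (GB) * headroom
# factor (default 1.5), rounded up to a whole GB, never below 1 GB.
suggest_mem_limit() {
  awk -v peak="$1" -v f="${2:-1.5}" 'BEGIN {
    x = peak * f
    n = int(x); if (n < x) n++   # round up
    if (n < 1) n = 1             # keep at least 1 GB
    print n "g"
  }'
}

suggest_mem_limit 1       # 2g (peak of 1GB, as in the example above)
suggest_mem_limit 3.2 2   # 7g
```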

Conclusion

This approach has worked exceptionally well for me, allowing my unRAID server to handle demanding tasks without compromising performance.

If you’re struggling with resource allocation on unRAID, I encourage you to try this method and see how it improves your system’s performance.

If you’ve found this content helpful and would like to support the blog, consider donating by clicking here. Your support is greatly appreciated!